pigz is basically a parallel gzip that takes advantage of multiple cores. With massive files this can be a pretty big win, especially when you’ve got lots of cores sitting around.

Taking a 418 MB squid access log file, on a dual quad-core Nehalem L5520 box with HyperThreading turned on (16 logical cores):

[jallspaw@server01 ~]$ ls -lh daemon.log.2; time gzip ./daemon.log.2 ; ls -lh ./daemon.log.2.gz

-rw-r----- 1 jallspaw jallspaw 418M Apr 2 19:18 daemon.log.2

real 0m12.398s

user 0m12.107s

sys 0m0.288s

-rw-r----- 1 jallspaw jallspaw 45M Apr 2 19:18 ./daemon.log.2.gz

…now gunzipping it:

[jallspaw@server01 ~]$ ls -lh daemon.log.2.gz; time gunzip ./daemon.log.2 ; ls -lh ./daemon.log.2

-rw-r----- 1 jallspaw jallspaw 45M Apr 2 19:18 daemon.log.2.gz

real 0m3.245s

user 0m2.693s

sys 0m0.552s

-rw-r----- 1 jallspaw jallspaw 418M Apr 2 19:18 ./daemon.log.2

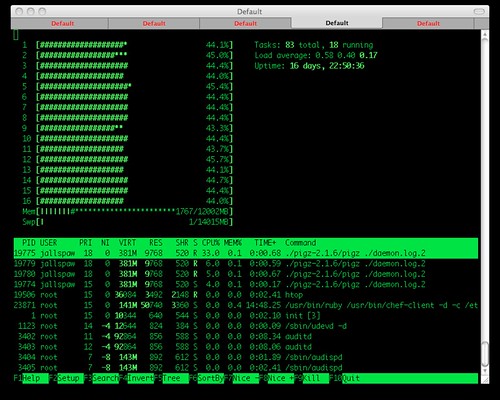

htop looks like this when this is happening:

(Note the freeloading/lazy 15 cores sitting around watching its friend core #10 sweating)

…now pigz’ing it:

[jallspaw@server01 ~]$ ls -lh daemon.log.2; time ./pigz-2.1.6/pigz ./daemon.log.2 ; ls -lh ./daemon.log.2.gz

-rw-r----- 1 jallspaw jallspaw 418M Apr 2 19:18 daemon.log.2

real 0m1.569s

user 0m23.092s

sys 0m0.422s

-rw-r----- 1 jallspaw jallspaw 45M Apr 2 19:18 ./daemon.log.2.gz

…now unpigz’ing it:

[jallspaw@server01 ~]$ ls -lh daemon.log.2.gz; time ./pigz-2.1.6/unpigz ./daemon.log.2.gz ; ls -lh ./daemon.log.2

-rw-r----- 1 jallspaw jallspaw 45M Apr 2 19:18 daemon.log.2.gz

real 0m1.456s

user 0m1.861s

sys 0m0.867s

-rw-r----- 1 jallspaw jallspaw 418M Apr 2 19:18 ./daemon.log.2

and htop looks like this when it’s happening:

Which do you like better?
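To put numbers on the comparison, here is a small sketch that turns the `time` output from the runs above into ratios. The variable names are made up for illustration; the values are copied from the transcripts.

```shell
# Timings in seconds, copied from the gzip/pigz runs in this post.
gzip_real=12.398
pigz_real=1.569
pigz_user=23.092
gunzip_real=3.245
unpigz_real=1.456

# awk does the floating-point division that plain sh arithmetic cannot.
summary=$(awk -v gr="$gzip_real" -v pr="$pigz_real" -v pu="$pigz_user" \
              -v ur="$gunzip_real" -v upr="$unpigz_real" 'BEGIN {
    printf "compress speedup: %.2fx\n", gr / pr
    printf "pigz user/real ratio: %.2f\n", pu / pr
    printf "decompress speedup: %.2fx\n", ur / upr
}')
echo "$summary"
```

The user/real ratio is a rough measure of how many logical cores pigz kept busy on average. The decompression gain is much smaller, which is consistent with the pigz documentation: decompression itself runs in a single thread, with helper threads only for reading, writing, and check calculation.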

Seems like a nice improvement in total run time.

Isn’t the load average pretty high with pigz?

Denish

For the 1.55 seconds, yeah the load average I think went above 1. 🙂 The load average will definitely go up because of the number of simultaneous jobs running.
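If the extra load is a concern on a busy host, one option is to cap the thread count with pigz's `-p` flag and lower the priority with `nice`. This is a minimal sketch, not a tuned recipe: the sample file, the thread count of 4, and the gzip fallback (there only so the script runs where pigz is not installed) are all assumptions.

```shell
set -eu

workdir=$(mktemp -d)
sample="$workdir/daemon.log.sample"   # made-up stand-in for a real log file
seq 1 200000 > "$sample"

threads=4   # illustrative value; tune for the host

if command -v pigz >/dev/null 2>&1; then
    # -p caps the number of compression threads; nice lowers scheduling priority.
    nice -n 19 pigz -p "$threads" "$sample"
else
    # Fallback so the sketch still runs without pigz (single-threaded).
    nice -n 19 gzip "$sample"
fi

# gzip -t checks the integrity of the result, whichever tool wrote it.
gzip -t "$sample.gz"
result=ok
echo "compressed and verified: $result"
rm -rf "$workdir"
```

Without `-p`, pigz defaults to one compression thread per online processor, which is what produces the fully lit htop display.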

Excellent boost. Makes me wonder if several of the GNU utils need a refresh for parallel opportunities?

Also parallel bzip2

http://compression.ca/pbzip2/

ta

Alex

It’d be interesting to see how long each method takes to gzip 16 files. I suspect for most of us the problem is not compressing one log file, but compressing the thousands generated every hour.

Alex
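For the many-small-files case, a different way to use the cores is to run plain gzip on several files at once. The sketch below does that with `find` and `xargs -P`; the sample files are generated just for the demo, and `-print0`, `-0`, and `-P` are GNU/BSD extensions rather than strict POSIX. It was not benchmarked against pigz here.

```shell
set -eu

workdir=$(mktemp -d)

# Create 16 sample "log" files (made-up names and contents).
i=1
while [ "$i" -le 16 ]; do
    seq 1 20000 > "$workdir/access.$i.log"
    i=$((i + 1))
done

# Number of online processors on Linux and macOS; fall back to 4 if unknown.
jobs=$(getconf _NPROCESSORS_ONLN 2>/dev/null || echo 4)

# -print0 / -0 keep unusual file names intact, -n 1 hands each gzip one file,
# and -P runs up to $jobs gzip processes at the same time.
find "$workdir" -name '*.log' -print0 | xargs -0 -n 1 -P "$jobs" gzip

compressed_count=$(find "$workdir" -name '*.log.gz' | wc -l | tr -d ' ')
echo "compressed $compressed_count files"
rm -rf "$workdir"
```

Each file is still compressed by a single core, so this helps with throughput over many files rather than with the latency of one huge file.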

So how’s the file format compatibility with gzip, each way?

Alex: in our case, we have some database backups that are massive, but yeah, assuming there’s some recursive compression going on across many, many files, that would be interesting to see.

David: Looks good:

[jallspaw@server01 ~]$ ./pigz-2.1.6/pigz daemon.log.2

[jallspaw@server01 ~]$ gunzip ./daemon.log.2.gz

[jallspaw@server01 ~]$ gzip ./daemon.log.2

[jallspaw@server01 ~]$ ./pigz-2.1.6/unpigz ./daemon.log.2.gz

[jallspaw@server01 ~]$
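The commands above show that each tool accepts the other's output; a stricter check also compares the decompressed bytes against the original. A rough sketch, assuming a generated sample file and falling back to gzip for both roles when pigz is not installed (in which case it only proves the script runs, not cross-tool compatibility):

```shell
set -eu

workdir=$(mktemp -d)
original="$workdir/daemon.log.sample"   # made-up stand-in for the real log
seq 1 100000 > "$original"
cp "$original" "$workdir/reference"

# Use pigz when installed; otherwise fall back to gzip so the script still runs.
if command -v pigz >/dev/null 2>&1; then
    parallel_zip="pigz"; parallel_unzip="pigz -d"
else
    parallel_zip="gzip"; parallel_unzip="gzip -d"
fi

# Direction 1: compress with pigz, decompress with gzip.
$parallel_zip "$original"
gzip -d "$original.gz"
cmp "$original" "$workdir/reference"

# Direction 2: compress with gzip, decompress with pigz.
gzip "$original"
$parallel_unzip "$original.gz"
cmp "$original" "$workdir/reference"

# set -e aborts on any cmp mismatch, so reaching this line means both matched.
roundtrip=ok
echo "round trips match: $roundtrip"
rm -rf "$workdir"
```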

Database backups are a sweet spot for me, too. When I need to set up a new MySQL replication slave of an existing master, the faster I can get the snapshot loaded on the slave, the faster the slave can catch up to the ongoing changes on the master and become available for reads. Of course, the additional load on the master during the parallel gzip has to be taken into account.

It might be interesting to combine pigz with mk-parallel-dump for quick test exports on quiescent systems, depending on how much mk-parallel-dump is already saturating the cores. mk-parallel-dump currently defaults to gzip.
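For the snapshot use case, the dump can be compressed on the fly by piping it through pigz, so no uncompressed copy ever hits the disk. This is only a sketch: `dump_database` is a hypothetical stand-in for a real dump command such as mysqldump, the file name is invented, and gzip is used as a fallback where pigz is not installed.

```shell
set -eu

workdir=$(mktemp -d)

# Hypothetical stand-in for a real dump command; emits 1000 SQL statements.
dump_database() {
    i=1
    while [ "$i" -le 1000 ]; do
        echo "INSERT INTO t VALUES ($i);"
        i=$((i + 1))
    done
}

if command -v pigz >/dev/null 2>&1; then zip=pigz; else zip=gzip; fi

# Compress the stream as it is produced; -c writes to standard output.
dump_database | "$zip" -c > "$workdir/snapshot.sql.gz"

# On the receiving host, stream it back out. Here the statements are counted
# instead of being piped into a database client.
restored_lines=$("$zip" -dc "$workdir/snapshot.sql.gz" | wc -l | tr -d ' ')
echo "restored $restored_lines statements"
rm -rf "$workdir"
```

As noted above, the compression threads compete with the database for CPU on the source host, so this fits best on quiescent systems or with a capped thread count.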

I like pigz but could not find a statically compiled binary of it.

The problem is that one of our servers still has an outdated version of zlib, so I cannot build pigz from source.

I’d appreciate it if you know where I can find one.

if your system’s zlib is too old … but you still need to build zlib from source.

Get a recent copy of zlib, build it, modify pigz Makefile CFLAGS line to something like this …

CFLAGS=-O3 -Wall -Wextra -I../zlib-1.2.5/ -L../zlib-1.2.5/

I used zlib-1.2.5; change the “..”, of course, to the full path of your zlib-1.2.X if necessary.

Note: https://bugs.launchpad.net/ubuntu/+source/pigz/+bug/538539