Like all sane web organizations, we gather metrics about our infrastructure and applications. As many metrics as we can, as often as we can. These metrics, given the right context, help us figure out all sorts of things about our application, infrastructure, processes, and business. Things such as…

What:

…did we do before (historical trending, etc)

…is going on right now? (troubleshooting, health, etc.)

…is coming down the road (capacity planning, new feature adoption, etc.)

…can we do to make things better (business intelligence, user-behavior, etc.)

All of which, of course, should be considered mandatory in order to help your business increase its awesome. Yay metrics!

Some time ago, Matthias wrote a great blog post about some of the metrics that can reasonably profile the effectiveness of web operations, taken from the ITIL primer, VisibleOps.

In my opinion, there’s nothing on that list of things that isn’t valuable, as long as the cost of gathering those metrics isn’t too behaviorally, technically, or organizationally expensive. The topics included in that list of metrics and the context they live in are fodder for many, many blog posts.

But in the category of historical trending, I’m more and more fascinated by gathering what I’ll call “meta-metrics”, which is data about how you respond to the changes your system is experiencing.

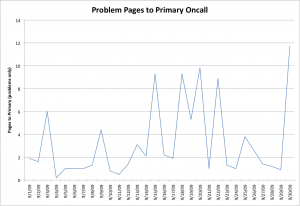

One of the best examples of this is gathering information about operational disruptions. Collecting information about how many times your on-call rotation was alerted/paged/woken-up, during what times, and for what service(s) can be enlightening to say the least. We’ve been tracking the volume of alerts a lot more closely recently, and even with the level of automation we’ve got at Flickr, it’s still something you have to keep on top of, especially if you’re always finding new things to measure and alert on.

Now ideally, you have an alerting system that only communicates conditions that need a human to take action to resolve them. Which means every alert is critically important, and you’re not ignoring or dismissing any pages for any reasons that sound like “oh, that’s ok, that cluster always does that…it’ll clear up, I’ll just acknowledge the page so I can shut up nagios.” In other words, our goal is to have a zero-noise alerting system. Which means that all alerts are actionable, not ignorable, and require a human to troubleshoot or fix. Over time, you push as much of this work as you can to the robots. In the meantime, save humans for the yet-to-be-automated work, or the stuff that isn’t easily captured by robots.

Why is this important to us? I may be stating the obvious, but it’s because interrupting humans with alerts that don’t require action has a mental and physical context switching cost (especially if the guy on-call was sleeping), and it increases the likelihood of missing a truly critical page in a slew of non-critical ones.

Of course in the reality of evolving and growing web applications, even if we could reach a 100% noise-free alerting system, it’s impossible to sustain for any extended period of time, because your application, usage, and failure modes are constantly changing. So in the meantime, knowing how your alerts affect the team is a worthwhile thing to do for us. In fact, I think it’s so important that it’s worth collecting and displaying next to the rest of your metrics, and exposing these metrics to the entire dev and ops groups.

Something like this: (made-up numbers)

Gathering up info about these alerts should give us a better perspective on where we can improve. So, things like:

- How many critical alerts are sent on a daily/hourly/weekly basis?

- What does a time histogram of the alerts look like? Do you get more or fewer alerts during nighttime or non-peak hours?

- How much (if any) correlation is there between critical alerts and:

  - code deploys?

  - software upgrades?

  - feature launches?

  - open API abuse?

- What does a breakdown of the alerts look like, in terms of: host type, service type, and frequency of each in a given time period?

and maybe the most important ones:

- How many of those alerts aren’t actually critical or don’t demand human attention?

- How many of them always self-recover?

- How many (and which) don’t matter in their role context (like, a single node in a load-balanced cluster) and could be turned into an aggregate check?
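To make a few of those questions concrete, here is a minimal sketch of the kind of crunching I mean. It is not our actual tooling: the `Alert` record layout, the 22:00 to 07:00 "night" window, the 10-minute self-recovery threshold, and the 30-minute deploy window are all assumptions picked for illustration, and the sample data is made up.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Alert:
    """One page sent to on-call (hypothetical record layout)."""
    sent_at: datetime
    host_type: str
    service: str
    recovered_at: Optional[datetime] = None  # when the check went back to OK
    human_acted: bool = False                # did someone have to fix something?


def hourly_histogram(alerts):
    """Count alerts per hour of day (0-23)."""
    return Counter(a.sent_at.hour for a in alerts)


def is_night(alert, start=22, end=7):
    """True if the alert fired in the assumed night window [start, end)."""
    hour = alert.sent_at.hour
    return hour >= start or hour < end


def breakdown(alerts):
    """Count alerts per (host type, service) pair."""
    return Counter((a.host_type, a.service) for a in alerts)


def self_recovered(alerts, within=timedelta(minutes=10)):
    """Alerts that cleared within `within` without anyone acting on them."""
    return [
        a for a in alerts
        if not a.human_acted
        and a.recovered_at is not None
        and a.recovered_at - a.sent_at <= within
    ]


def near_deploy(alerts, deploys, window=timedelta(minutes=30)):
    """Alerts sent within `window` after any of the given deploy times."""
    return [
        a for a in alerts
        if any(timedelta(0) <= a.sent_at - d <= window for d in deploys)
    ]


# Made-up sample data.
alerts = [
    Alert(datetime(2009, 6, 1, 3, 12), "db", "replication_lag",
          recovered_at=datetime(2009, 6, 1, 3, 15)),
    Alert(datetime(2009, 6, 1, 3, 40), "web", "http",
          recovered_at=datetime(2009, 6, 1, 4, 30), human_acted=True),
    Alert(datetime(2009, 6, 1, 14, 5), "web", "http",
          recovered_at=datetime(2009, 6, 1, 14, 9)),
    Alert(datetime(2009, 6, 2, 14, 20), "db", "disk", human_acted=True),
]
deploys = [datetime(2009, 6, 1, 14, 0)]

print("by hour:", dict(hourly_histogram(alerts)))
print("night alerts:", sum(is_night(a) for a in alerts))
print("by host type/service:", dict(breakdown(alerts)))
print("self-recovered:", len(self_recovered(alerts)))
print("shortly after a deploy:", len(near_deploy(alerts, deploys)))
```

The self-recovered list is probably the most useful output here: anything that shows up in it repeatedly is a candidate for a longer threshold, an aggregate check, or not paging at all.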

We’ve built our own stuff to track and analyze these things. My question to the community is: I’m not aware of any open-source tool that is dedicated to analyzing these metrics. Does one exist? Nagios obviously has host/hostgroup/cluster warning and critical histories, and those can be crunched to find critical alert statistics, but I’m not aware of any comprehensive crunching. Of course, until I find one, we’re just building our own.
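As a rough idea of what crunching those Nagios histories could look like, here is a small sketch that pulls service notifications out of log lines and counts the critical ones per host and service. It assumes the common `nagios.log` layout of `[epoch] SERVICE NOTIFICATION: contact;host;service;state;command;output`, so check it against your own logs before trusting it. The function names and the sample lines are made up for illustration.

```python
import re
from collections import Counter
from datetime import datetime, timezone

# Assumed line layout:
# [epoch] SERVICE NOTIFICATION: contact;host;service;state;command;output
LINE_RE = re.compile(
    r"^\[(\d+)\] SERVICE NOTIFICATION: "
    r"([^;]*);([^;]*);([^;]*);([^;]*);([^;]*);(.*)$"
)


def parse_notifications(lines):
    """Yield one dict per service notification line, skipping everything else."""
    for line in lines:
        match = LINE_RE.match(line.strip())
        if not match:
            continue
        ts, contact, host, service, state, command, output = match.groups()
        yield {
            "time": datetime.fromtimestamp(int(ts), tz=timezone.utc),
            "contact": contact,
            "host": host,
            "service": service,
            "state": state,
            "command": command,
            "output": output,  # may itself contain semicolons
        }


def critical_counts(lines):
    """Count CRITICAL notifications per (host, service) pair."""
    return Counter(
        (n["host"], n["service"])
        for n in parse_notifications(lines)
        if n["state"] == "CRITICAL"
    )


# Made-up sample lines.
sample = [
    "[1245000000] SERVICE NOTIFICATION: oncall;web12;HTTP;CRITICAL;notify-by-pager;Connection refused",
    "[1245000060] SERVICE ALERT: web12;HTTP;CRITICAL;HARD;3;Connection refused",
    "[1245000120] SERVICE NOTIFICATION: oncall;db3;MySQL;WARNING;notify-by-email;Slave lag; 120s",
    "[1245003600] SERVICE NOTIFICATION: oncall;web12;HTTP;CRITICAL;notify-by-pager;Connection refused",
]

for (host, service), count in critical_counts(sample).most_common():
    print(f"{host}/{service}: {count} critical notifications")
```

Counting notifications rather than state changes is deliberate: a notification is what actually interrupted a human, which is the thing being measured here.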

Thoughts, lazyweb?

That is certainly a sore point. Lots of (most?) places will alert for everything and anything without much consideration for the human component. What’s even worse, if there is ever a major outage, even more alerts will be added, draining the human capital even further.

In the past I have mostly tried to identify those alerts that are truly critical, i.e. worth waking someone up for; however, the idea of graphing them is an interesting one. I don’t believe there are any tools, whether open source or commercial, available for such a purpose. I’d definitely be interested in one.

At Six Apart we’ve put together a bunch of scripts that analyze the Nagios logs for alert notifications; we have a couple twiddles to only display critical alerts, or only nighttime alerts, or both. We get a nightly report with a breakdown of top alerts, broken down by host (and then by service within that host) and a breakdown by service (and then by host — useful for seeing if a check is broken or misconfigured, or if there was some more widespread problem).

It gives me a great view into not just what people are woken up for, but the general pulse of our systems overall. (I’d be happy to clean it up and share it with the world … perhaps I should.) And really, at some point, something that sits in a “warning” state for an entire weekend — never actually paging someone — is just as actionable and just as important as a false positive critical alert: it either needs to be silenced or it’s indicative of a real problem.

At Clickability we do something similar where we track and publish a monthly metric on “total alerts sent” and “number of nights on-call was woken up”. Clearly the latter is critical to keeping an operations team sane and happy. We’ve not yet gone down the path of further analyzing the results; rather, we spend our time making sure that every single alert is an actionable item that requires human intervention. If it does not, we create automation or set it to not page.

I would love to be able to utilize an open source page that digs deeper in Nagios to understand alerts better. Thank you for suggesting it.

Thanks for the trackback, John!

It seems like we have all the fundamental tools for monitoring server problems (nagios), site outages (pingdom) and release metrics (cruisecontrol). What we’re missing is your “meta-metrics” layer that provides another layer of abstraction on top of these, performing some intelligent data-mining to give us better insights into how we are _really_ doing operationally.

I’ve got a damn Excel pivottable in my head for some reason : cruisecontrol release metrics vs. nagios alerts vs. pingdom warnings … now just add some Splunk trending to the mix. Very sexy tool indeed!


@admob we have since the summer started mining nagios alert logs across different dimensions,

1. application [easily done in our env because we pointed them to different contactgroups]

2. colo

as with any of this, data visualization [and a good one at that] is a worthwhile investment, and it has yielded for us the result that we get to focus on things besides only the high runners.

great post and really like your take on the metrics as a way of doing the “showme” and leading to a better engineered system. thanks and keep up the good work.